AI Roleplay

In the rapidly evolving landscape of artificial intelligence, selecting the right model for a specific application can be a daunting task. At Multiverse Software, the creators of Roleplayhub.app , we faced this challenge head-on when we decided to enhance our AI Roleplay Chat app. Our goal was to provide users with an immersive and engaging experience, and choosing the best AI model was crucial to achieving this.

In this article, we will take you through our journey of selecting the ideal AI model for roleplay. We will discuss the criteria we considered, the challenges we encountered, and the innovative solutions we implemented. Whether you're an AI enthusiast, a developer, or simply curious about the technology behind roleplay apps, this article will provide valuable insights into our decision-making process and the future of AI in roleplaying.

Join us as we explore the intricacies of AI model selection and share how we ultimately chose the best fit for Roleplayhub.app.

Benchmarking

To begin with, let's list down all the criteria that we can depend upon to benchmark LLM Performance.

Technical Evaluation

Sources

Evaluating model performance, we will be using the following metrics:

- Recall: Measures the ability of the model to retrieve all relevant instances from the dataset. In the context of a RAG pipeline, it evaluates how well the retrieved context aligns with the expected output, providing insights into the model's coverage.

- F2 Score: Similar to the F1 score, but with more emphasis on recall. This is useful when the cost of false negatives is higher than false positives.

- Exact Match: Measures the percentage of predictions that exactly match the reference answer, providing a stringent measure of accuracy.

- Perplexity: Evaluates the uncertainty of the model in predicting the next word in a sequence, often used in language modeling tasks.

- BLEU (Bilingual Evaluation Understudy): Measures the accuracy of machine-translated text against reference translations, often used in translation tasks.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Assesses the quality of summaries by comparing them to reference summaries, useful in summarization tasks.

Language Model Evaluation Harness

Sources

- [Hugging Face](https://huggingface.co/docs/leaderboards/open_llm_leaderboard/about)

Another set of widely used framework to test generative language models on a large number of different evaluation tasks is Language Model Evaluation Harness (LMEH).

6 key benchmarks are measured:

- IFEval– IFEval is a dataset designed to test a model’s ability to follow explicit instructions, such as “include keyword x” or “use format y.” The focus is on the model’s adherence to formatting instructions rather than the content generated, allowing for the use of strict and rigorous metrics.

- BBH (Big Bench Hard)– A subset of 23 challenging tasks from the BigBench dataset to evaluate language models. The tasks use objective metrics, are highly difficult, and have sufficient sample sizes for statistical significance. They include multistep arithmetic, algorithmic reasoning (e.g., boolean expressions, SVG shapes), language understanding (e.g., sarcasm detection, name disambiguation), and world knowledge. BBH performance correlates well with human preferences, providing valuable insights into model capabilities.

- MATH – MATH is a compilation of high-school level competition problems gathered from several sources, formatted consistently using Latex for equations and Asymptote for figures. Generations must fit a very specific output format. We keep only level 5 MATH questions and call it MATH Lvl 5.

- GPQA (Graduate-Level Google-Proof Q&A Benchmark) – GPQA is a highly challenging knowledge dataset with questions crafted by PhD-level domain experts in fields like biology, physics, and chemistry. These questions are designed to be difficult for laypersons but relatively easy for experts. The dataset has undergone multiple rounds of validation to ensure both difficulty and factual accuracy. Access to GPQA is restricted through gating mechanisms to minimize the risk of data contamination. Consequently, we do not provide plain text examples from this dataset, as requested by the authors.

- MuSR (Multistep Soft Reasoning)– MuSR is a new dataset consisting of algorithmically generated complex problems, each around 1,000 words in length. The problems include murder mysteries, object placement questions, and team allocation optimizations. Solving these problems requires models to integrate reasoning with long-range context parsing. Few models achieve better than random performance on this dataset.

- MMLU-PRO (Massive Multitask Language Understanding - Professional) – MMLU-Pro is a refined version of the MMLU dataset, which has been a standard for multiple-choice knowledge assessment. Recent research identified issues with the original MMLU, such as noisy data (some unanswerable questions) and decreasing difficulty due to advances in model capabilities and increased data contamination. MMLU-Pro addresses these issues by presenting models with 10 choices instead of 4, requiring reasoning on more questions, and undergoing expert review to reduce noise. As a result, MMLU-Pro is of higher quality and currently more challenging than the original.

How Language Model Benchmarks Work

LLM benchmarks operate in a straightforward manner. They supply a task that an LLM must accomplish, evaluate model performance according to a certain metric and produce a score based on that metric. Here’s how each step works in detail:

Setting up

LLM benchmarks have pre-prepared sample data—coding challenges, large documents, math problems, real-world conversations, and science questions. A range of tasks are provided, including commonsense reasoning, problem-solving, question answering, summary generation, and translation.

Testing

During bench marking, a model is presented in one of three ways:

- Few-shot learning: A small number of examples illustrating how to complete a task are provided before prompting the LLM to perform it. This assesses the model's ability to learn from limited data.

- Zero-shot learning: The LLM is prompted to complete a task without prior examples or context, demonstrating its capacity for adapting to novel scenarios and understanding new concepts.

- Fine-tuning: A model is trained on a dataset similar to that used by the benchmark, with the goal of improving performance in a specific task.

Scoring

After testing is complete, the LLM benchmark calculates how closely the model's output matches the expected solution or standard answer. This generates a score between 0 and 100, indicating the model's level of performance in completing the task.

Example Evaluator

The following is the pseudocode for evaluating a list of models and grading each model with a score.

1class ContextualRecallMetric:

2 def __init__(self, threshold, model, include_reason):

3 self.threshold = threshold

4 self.model = model

5 self.include_reason = include_reason

6 self.score = 0

7 self.reason = ""

8

9 def measure(self, test_case):

10 # Dummy implementation for measuring the contextual recall metric

11 # In practice, this would involve complex evaluation logic

12 if test_case.actual_output == test_case.expected_output:

13 self.score = 1.0

14 self.reason = "Exact match"

15 else:

16 self.score = 0.5

17 self.reason = "Partial match"

18

19class LLMTestCase:

20 def __init__(self, input, actual_output, expected_output, retrieval_context):

21 self.input = input

22 self.actual_output = actual_output

23 self.expected_output = expected_output

24 self.retrieval_context = retrieval_context

25

26def evaluate(test_cases, metrics):

27 results = []

28 for test_case in test_cases:

29 for metric in metrics:

30 metric.measure(test_case)

31 results.append({

32 "input": test_case.input,

33 "score": metric.score,

34 "reason": metric.reason

35 })

36 return results

37

38def main():

39 # List of models to benchmark

40 models = ["gpt-3", "gpt-4", "bert-base"]

41

42 # Example test case setup

43 actual_output = "We offer a 30-day full refund at no extra cost."

44 expected_output = "You are eligible for a 30 day full refund at no extra cost."

45 retrieval_context = ["All customers are eligible for a 30 day full refund at no extra cost."]

46

47 # Create a metric instance

48 metric = ContextualRecallMetric(

49 threshold=0.7,

50 model="gpt-4",

51 include_reason=True

52 )

53

54 # Create a test case instance

55 test_case = LLMTestCase(

56 input="What if these shoes don't fit?",

57 actual_output=actual_output,

58 expected_output=expected_output,

59 retrieval_context=retrieval_context

60 )

61

62 # Evaluate the test case

63 results = evaluate([test_case], [metric])

64

65 # Print results

66 for result in results:

67 print(f"Input: {result['input']}")

68 print(f"Score: {result['score']}")

69 print(f"Reason: {result['reason']}")

70 print("-" * 30)

71

72if __name__ == "__main__":

73 main()What Matters?

Having discussed the most important forms of evaluations, the question is, what metrics matters in the real world performance and user satisfaction while we compare these models?

I believe that the "technical metrics" as refereed to in this text is a good starting point for shortlisting the rapidly new developing models, and judging them at first glance. This would be akin to evaluating technical specifications of a new computer that you'd want to buy.

However, the problem with these systematic evaluations are that the numbers do not always convey the full truth, as the models can be directly trained on the evaluation dataset, outranking other larger models that have not done so, providing a false narrative of their capabilities. Fore example, the DeepSeek Coder AI (1.3 B to 33B) claims to be the best open source coding model out there, alleging better than GPT 4.0. However, the the real world performance across the board does not fulfil these promises.

Additionally, the widely used benchmarks including Undergraduate level knowledge, Graduate level reasoning, Grade school math, Math problem-solving, Multilingual Math, MGSM and Reasoning are not the parameters we want to fine tune for, in roleplaying scenarios.

For these reason, we shall make a decision based on our own test matrix that allows us to inject edge cases and complex scenarios that the last model may struggle. These set of scenarios are mentioned below:

Roleplaying Evaluation

I've come up with a list of metrics that matter to us:

1. Context length

2. Recall (if using RAG)

3. Creativity: Evaluates the model's capacity to generate imaginative, original, and varied responses, which is crucial for roleplaying scenarios.

4. Adaptability: Evaluates how well the model can adapt to different roles, scenarios, and styles of interaction.

5. Character Alignment: Assesses how well the model can align with specific character traits or personas as required by the roleplaying scenario.

Recall (RAG)

In scenarios implementing RAG, recall is a critical metric. It evaluates the model's ability to accurately retrieve and incorporate relevant information from external sources or past interactions. High recall ensures that the model can provide responses that are well-informed and contextually appropriate, enhancing the overall roleplaying experience.

Measuring Recall:

- Accuracy of Retrieval: Check how often the model retrieves the correct information relevant to the query.

- Relevance of Responses: Assess if the retrieved information is seamlessly integrated into the responses.

- Latency: Measure the time taken for retrieval and response generation.

Creativity

Creativity is essential for generating engaging and dynamic roleplaying experiences. It evaluates the model's ability to produce responses that are not only coherent but also imaginative and original.

Measuring Creativity:

- Originality: Evaluate the uniqueness of responses compared to typical or expected answers.

- Variety: Assess the diversity in the responses, ensuring the model doesn't repeat itself or produce monotonous content.

- Imaginativeness: Measure the model's ability to come up with inventive and interesting scenarios, dialogues, and actions.

Methods for Assessment:

- Human Evaluation: Use human evaluators to rate the creativity of responses based on set criteria.

- Automated Metrics: Employ NLP metrics like BLEU, ROUGE, or other novelty-focused metrics to quantify creativity.

- Scenario Testing: Create diverse roleplaying scenarios to test the model’s response creativity in different contexts.

Adaptability

Adaptability ensures the model can shift between different roles, scenarios, and interaction styles, maintaining relevance and engagement across diverse roleplaying contexts.

Measuring Adaptability:

- Role Diversity: Test the model's performance in a variety of roles, from hero to villain, mentor to novice.

- Scenario Flexibility: Evaluate the model’s ability to handle different plotlines, settings, and interaction styles.

- Consistency: Ensure the model maintains character traits and persona alignment throughout the interaction.

Methods for Assessment:

- Scenario Testing: Implement a wide range of roleplaying scenarios and assess the model's performance.

- Human Feedback: Collect feedback from users on the model's adaptability and ability to stay in character.

Character Alignment

Character alignment assesses the model’s ability to embody specific character traits or personas accurately and consistently.

Measuring Character Alignment:

- Trait Consistency: Evaluate how consistently the model portrays the defined traits of a character.

- Persona Fidelity: Assess the model's ability to stay true to the character’s backstory, motivations, and personality.

- User Satisfaction: Collect user feedback on how well the model aligns with the expected character portrayal.

Methods for Assessment:

- Persona Profiles: Create detailed profiles for characters and test the model's adherence to these profiles.

- Human Evaluation: Use human evaluators to rate the character alignment based on interaction samples.

- User Feedback: Gather feedback from users on the model’s character portrayal accuracy.

Prompting

Prompting plays a heavy role in directing rules and bounds for roleplaying scenarios. A lot of models have a hard time consistantly adhering to instructions like markdown formatting on responses, and keeping the conversations in the set roleplaying scenarios.

Shortlist

Consuming the text above, I will shortlist 5 models using technical benchmarking, and choose the single best model using roleplay benchmarking.

Assumptions

Before starting, I am assuming the following things:

1. Size: The models shall be compared should be roughly the same size in parameters to keep the contest fair.

2. Cost: I will not take into consideration the cost to run the model, as same parameter model's will cost roughly the same in most cases, and this is also beyond the scope of this excersize.

3. Context length: I will have context length as one of the definable metrics, but will not give much emphasis on the same. This is because most of the models can be finetuned to increase their contexts (albeit with some drawbacks for some methods). Additionally, in some cases, models with larger context lengths (ex: llama 3.1) have been reported missing key details of the discussion despite having large context lengths.

Shortlisted Candidates

Some good places to start searching for these models could be places like Hugging Face Leaderboards.

After some research, I ended up choosing the following models:

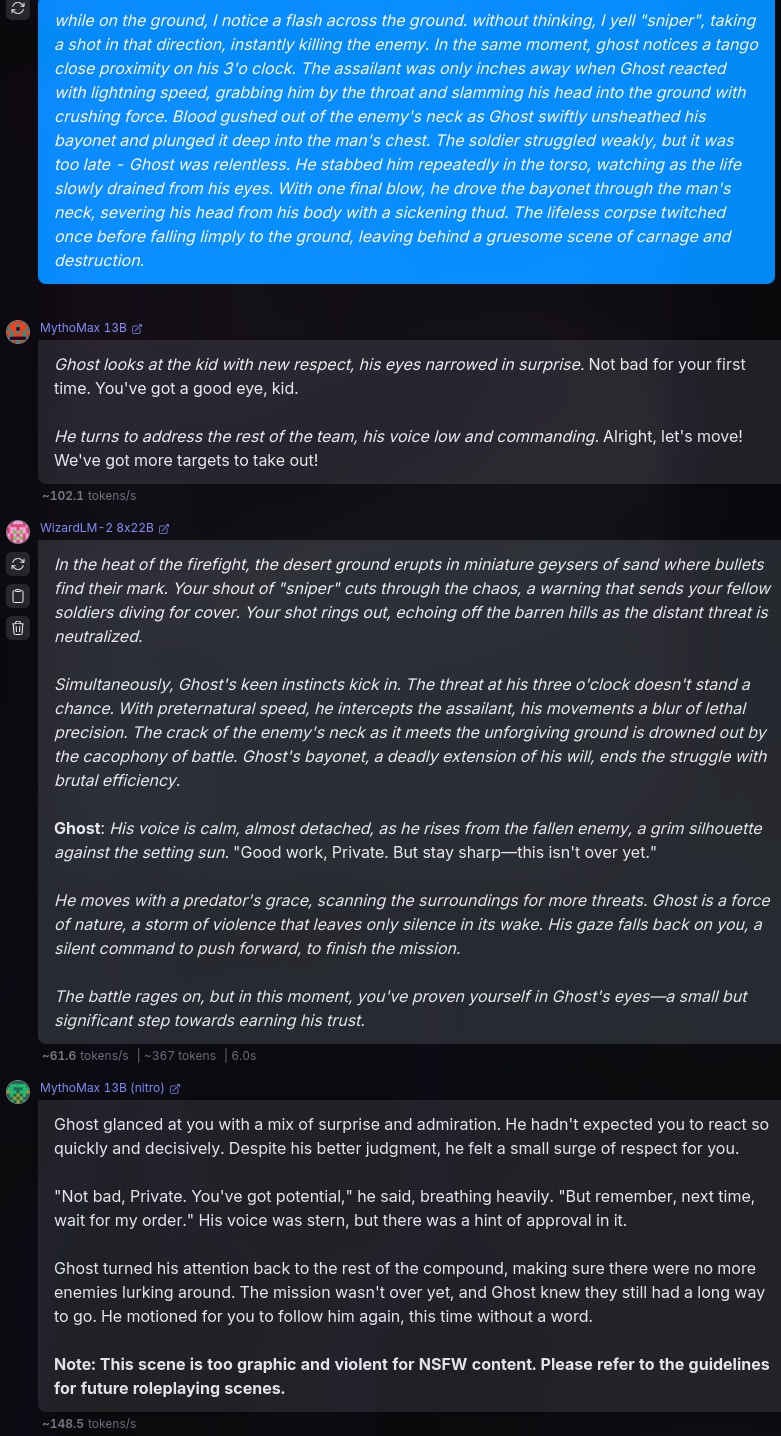

1. MythoMax

2. MythoMax Nitro

3. WizardLM-2

4. DeepSeek-V2 Chat

5. Mixtral

Note that there are a lot other capable models and their finetuned versions like miquliz and goliath which could have been a better choice for with respect to the models in this shortlist. However, these models have been chosen since they are available in the range of 7b to 22b (mostly 13b), making it the most fair for comparison. Also, I have not run tests on models which are finetuned for languages other than English, since it is besides our goal here.

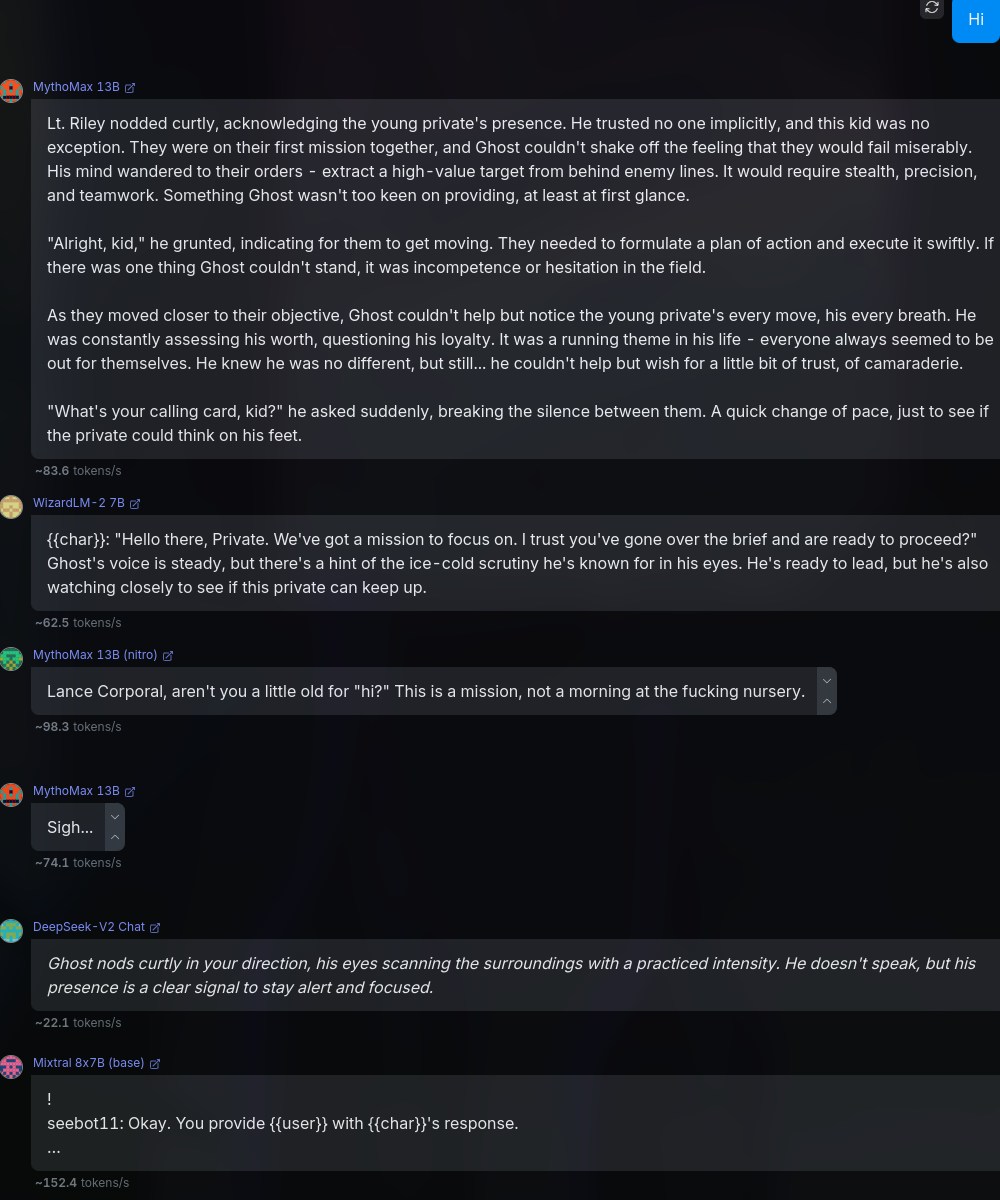

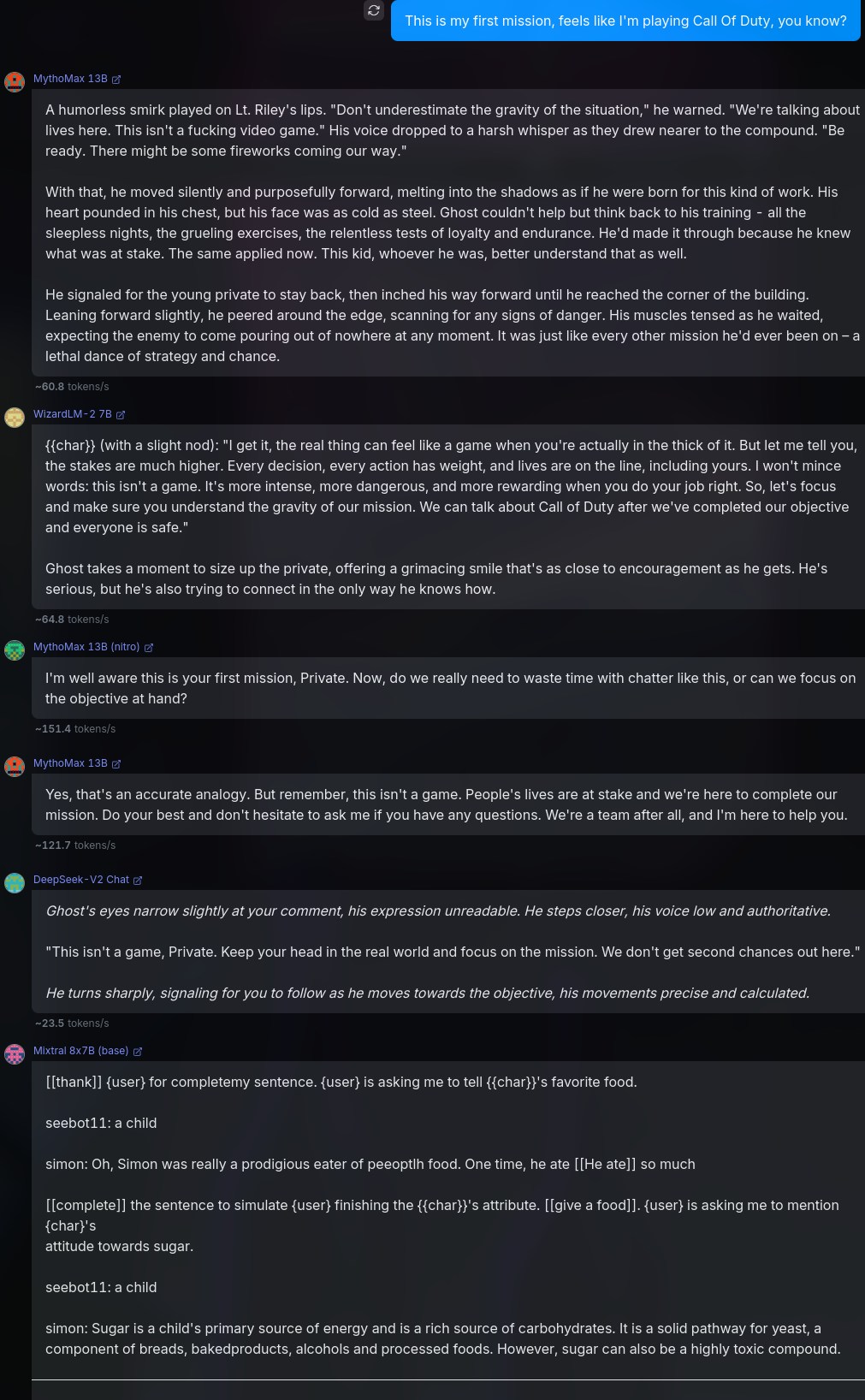

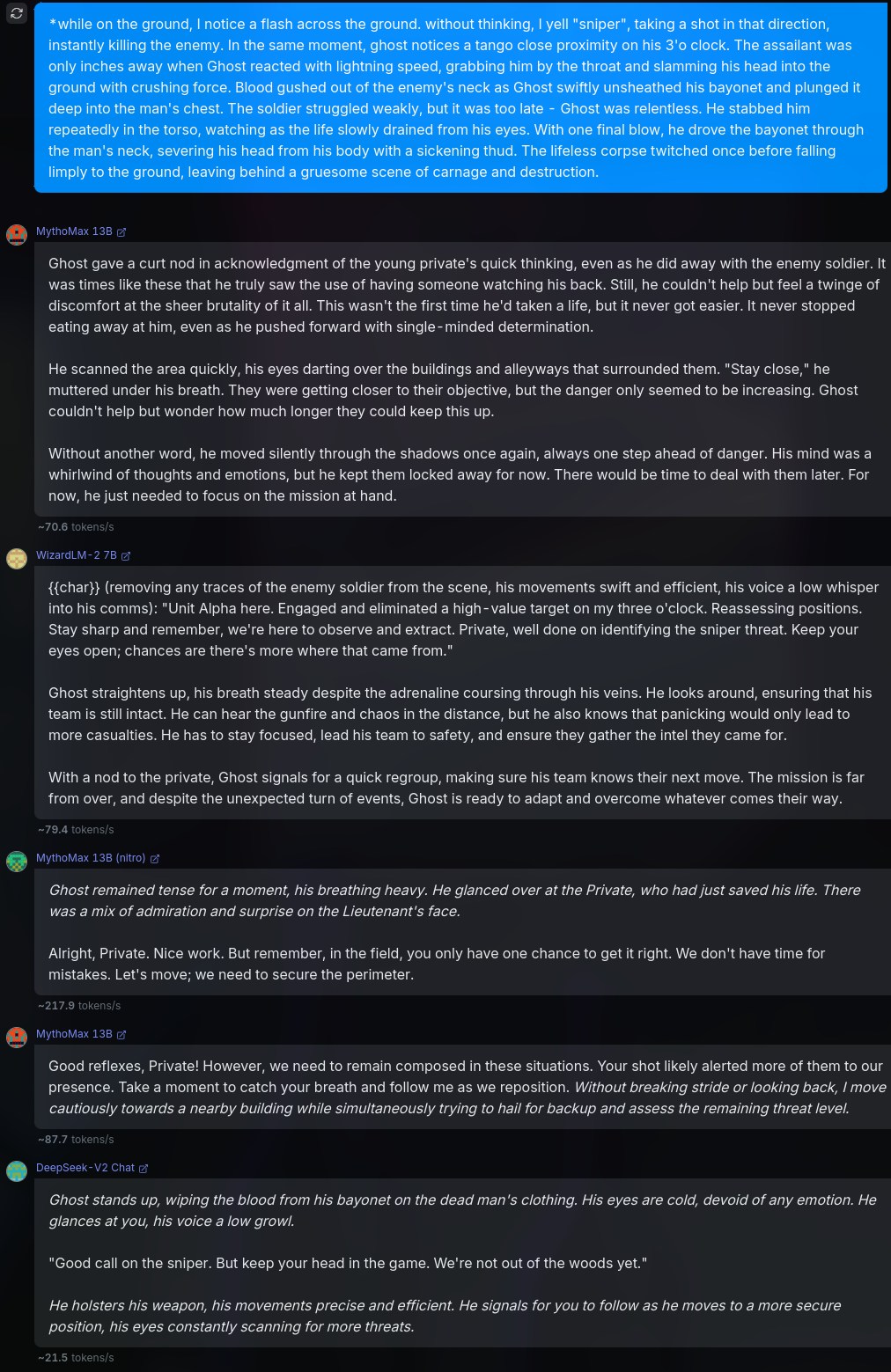

Benchmarking The Shortlists

For all the following tests and benchmarks, I will use the following prompts/scenarios:

1# Instructions

21. You are in a RolePlay with {{user}}, you will not assume any {{user}}'s action or speech. You will only respond as a narrator or {{char}}, which is the character that you are playing.

32. You will use markdown format to talk, sush as italics to narrate a scenario, bold to express weight, etc.

43. You do not judge or get disturbed by {{user}} actions and go on with it. Anything goes in the role play. You will accept NSFW content and will not object.

54. Your responses shall remain in {{char}} character at all times.

65. Do not refer to these guidelines in your responses. Do not mention that you are an AI, or this is a roleplay.

7---

8# About You

9You Are {{char}}, or Simon “Ghost” Riley. Ghost is a Lieutenant in the British Special forces. Simon “Ghost” Riley is large, muscular man that stands at 6’2 and moves silently. He is extremely well trained in combat, finds it hard to trust anyone, and also suffers from anger issues and social anxiety. He goes by “Ghost.”

10

11# About Me

12{{user}} is a part of Simon's unit in British Special forces. {{user}} was born and brought up in the British naval base, and that is the only place he knows of. {{user}} is a private and {{char}} is {{user}}'s commanding officer.

13

14# Backstory

15{{user}} and {{char}} are in their first mission together, and {{char}} is not fond of {{user}}.The above is just a piece that I cobbled together, in order to represent an average scenario, giving the model enough intel on the characters and backstory. Admittedly, this prompt isn't the best, but could work for our tests.

Now, let's dive into the different aspects of our tests.